While generative AI accelerates space domain awareness (SDA), going further to link GenAI via multiple large language models (LLMs) delivers revolutionary advantages—allowing space operators to rapidly manage assets, prevent collisions, and determine intent.

But as our team sees every day, GenAI needs human insights to get SDA right. Take the example of Booz Allen i2S2, our Integrated, Intelligent Space Domain Awareness-Space Traffic Management solution.

GenAI is only one member of a triad. Successfully linking it depends on the other two: the operator and the nerd. Meet an example of each.

THE OPERATOR: Jim Reilly, former astronaut, previous head of U.S. Geological Survey, and Booz Allen executive advisor

I’m an ops guy. When I look at GenAI, my first thought is, “What can I do with it?” If I see an AI-powered space robot, I think, “Could it be programmed to look for water-based mineral assemblages on Mars?” If a rover could do the complex reconnaissance for me, I could move faster with actionable findings.

Regarding Booz Allen i2S2, my colleagues on the technical side know the challenges of improving SDA, so it was natural for us to turn to LLMs to address critical problems in this area. As satellites proliferate and space becomes weaponized, space domain awareness needs to improve fast. NASA’s near-miss with Russian space junk is a good example of that urgent need.

What Do I Need to Know—and When?

For me, operational needs can be boiled down to, “What do I need to know, and when do I need to know it?” In the case of SDA, it’s all about speed of decision: Where are space objects I don’t want to fly into, and how can I maneuver to avoid running into them? And in the new era of contested space, we need to be able to identify intent: Is that a piece of space junk aimlessly drifting, or is it an adversarial satellite moving in for the attack?

Combining this operational need with the idea of linking LLMs together was the core of our SDA solution. While operations and AI architects working together is not a new idea, the speed and specificity needed to create tomorrow’s technologies require each side to deeply understand the other.

How Can I Fuse and Customize Military, Civil, and Commercial Space Data—for My Mission?

Fusing disparate data and being able to disseminate AI-powered information tailored to different users is a technical advance that moves missions forward. That means that I, as the user, get actionable information faster and in ways never before possible. But all that power is only going to serve me if the data scientists understand my point of view as an operator: what I do and how I think.

As my colleague Pat Biltgen said at Booz Allen’s Space+AI Summit, most of the successful systems in the Department of Defense are the result of what he called “the operator and the nerd” working together. He gave the example of translating requirements into specifics, where the conversation gets granular: “Does maintaining custody of an object mean you need to see it once an hour, or every 5 minutes?”

For Booz Allen i2S2, the engineering team took that perspective to heart. They sat down with operations types like me and got our input and feedback at the beginning of the process. They made sure they understood the users’ tech stack so we could develop an open-architecture solution that would work well with it, while making the components flexible so users from other space organizations could easily access it on their laptops.

The team developed prototypes rapidly and then ran them iteratively by the stakeholders, tweaking and adjusting with each version.

What Do I Want to Do for Myself, and What Do I Want to Automate?

We operators need to figure out not only what we need to know but also when we need to know it, partly so we can explain our priorities to the AI architects. For example, what decisions do we want the system to make, to free us from cognitive overload? And which do we want to focus our attention on, in situations where every second counts?

It’s the same principle we used at NASA when I led a team designing data displays for the International Space Station. Our philosophy was: Let’s get information to the human in a qualitative way, prioritizing it by when it needs to be known—and whether the human needs to do something about it or simply be informed.
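The display philosophy above can be sketched as a simple triage rule. This is only an illustration, not the NASA or i2S2 logic: the `Notice` fields, the sample messages, and the choice to rank actionable items ahead of informational ones are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Notice:
    message: str
    seconds_until_needed: float  # assumed field: how soon the operator must know
    needs_action: bool           # True if the operator must act, False if FYI only


def triage(notices):
    """Order notices: actionable before informational, then soonest deadline first."""
    return sorted(notices, key=lambda n: (not n.needs_action, n.seconds_until_needed))


# Hypothetical sample notices, invented for illustration.
notices = [
    Notice("Routine telemetry summary available", 3600, False),
    Notice("Conjunction predicted; maneuver decision required", 900, True),
    Notice("Space weather advisory updated", 600, False),
    Notice("Uncorrelated object closing; confirm sensor tasking", 120, True),
]

ordered = triage(notices)
for n in ordered:
    tag = "ACT" if n.needs_action else "INFO"
    print(f"[{tag}] {n.seconds_until_needed:>6.0f}s  {n.message}")
```

A real display would likely weigh urgency and required action together rather than sorting strictly on one before the other; the point is that both dimensions are explicit inputs.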

How Do I Know?—On-Dashboard Information, Alerts, and Courses of Action

Booz Allen data experts worked with operators like me to discover what elements needed to be easily accessed from the solution’s dashboard: probabilities of collision, adversarial intent, and more. We also needed the interface to deliver automated alerts with recommendations for optimal courses of action.

The delivery team added extra features such as language translation and the option for voice activation. The dashboard and attributes can be customized—that’s the beauty of modularity. And it means that I—the operator—am more in control than ever before.

THE NERD: Pat Biltgen, Booz Allen Vice President for Space Solutions, AI and Mission Engineer

GenAI is a tool, but the more it adapts to me, the more I find myself treating it like another person. Not that I trust everything it tells me, but I do find myself asking it questions I’d ask a human, like, “Why do you say that?”

Leading AI projects for space agencies, I see every day what a “What if?” mindset will do—especially when combined with clear processes and a little patience.

Why “Get the Operator and the Nerd in the Same Room”?

I like to say, “We have to get the operator and the nerd in the same room.” We sit with operators and watch them at work. Our approach is, “Show me what you are doing and let me try to understand how I might make it better.” As the largest provider of AI solutions to the federal government, Booz Allen specializes in teams that know the mission, yet our people still need to dive into each specific need.

Even simple interface tweaks save users precious time. For example, we noticed operators having to manually look up a given satellite’s attributes, so we created a “baseball card” animation: the satellite’s name appears on the front, and a click flips the card to show that satellite’s details on the reverse.

How Can I Earn the Operator’s Trust?

A high-level example of knowing your client: I lead systems engineering efforts for an SDA analytics framework for a national security client. I know not only their goals but also why there can be no tolerance for error: The stakes are too high. A solution can be trusted only after it has been tested and proven itself over time. If it’s wrong even on a minor point, operators won’t trust it on a major one.

A nerd will say, “I tested this under varied conditions and it’s 99.999% accurate.” Operators will say, “I still don’t trust it.” They have an intuition informed by all the subtleties that reveal themselves to the person who actually works with the system. And it goes without saying that every project must have guardrails and all the other elements that go into responsible AI.

How Do LLMs Enable Greater Sense-Making?

We’ve been working on AI solutions for SDA for years. In 2019, for example, we created a propulsion classifier that used the physics of velocity to ascertain whether a space object had an engine. And in our work for critical missions, we developed data innovations like cross domain solutions, multi-int fusion, and our AI software toolkit. So when LLMs made data advances possible, we were ready to dive in.
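To make the "physics of velocity" idea concrete, here is a minimal sketch of one way such a check could work. It is not the 2019 classifier, whose internals are not described here. It assumes a simple two-body model in which an unpowered object conserves specific orbital energy, so a jump in energy between observations hints at thrust. The function names, the tolerance, and the sample states are hypothetical.

```python
import math

MU_EARTH = 398600.4418  # Earth's gravitational parameter, km^3/s^2


def specific_energy(radius_km, speed_km_s):
    """Two-body specific orbital energy: v^2/2 - mu/r (km^2/s^2)."""
    return 0.5 * speed_km_s ** 2 - MU_EARTH / radius_km


def likely_maneuvered(states, tol=0.05):
    """Flag an object whose energy jumps by more than tol between observations.

    states: list of (radius_km, speed_km_s) pairs in time order.
    tol: assumed tolerance (km^2/s^2) to absorb noise and slow drag decay.
    """
    energies = [specific_energy(r, v) for r, v in states]
    return any(abs(b - a) > tol for a, b in zip(energies, energies[1:]))


r = 7000.0                       # km from Earth's center (illustrative)
v_circ = math.sqrt(MU_EARTH / r)  # circular-orbit speed at that radius

drifting = [(r, v_circ), (r, v_circ), (r, v_circ)]
maneuvered = [(r, v_circ), (r, v_circ + 0.05), (r, v_circ + 0.05)]

print(likely_maneuvered(drifting))
print(likely_maneuvered(maneuvered))
```

An energy test like this would miss burns that change only the orbital plane, which is one reason a production classifier would need richer features than this sketch uses.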

Speed is critical. To take Jim Reilly’s example: Is that object a piece of space junk aimlessly drifting? Or is it an adversary satellite just pretending to be space junk? I think of the “zombie satellite,” Russia’s Resurs-P asset that drifted so long it was considered nonfunctional—until it suddenly “came to life,” changed its orbit, and approached a Russian military satellite. We need the capability to ascertain or at minimum suggest intent now.

Linking LLMs: How Can We Fuse and Share Data Between Intelligence, Defense, and Civil Organizations?

Fusing space data from disparate sources and applying AI for awareness, tracking and alerts, and intent determination was a capability we’d long wanted to build. We jumped on the LLM breakthrough and began fast development to link LLMs together to enable data sharing between classification levels.

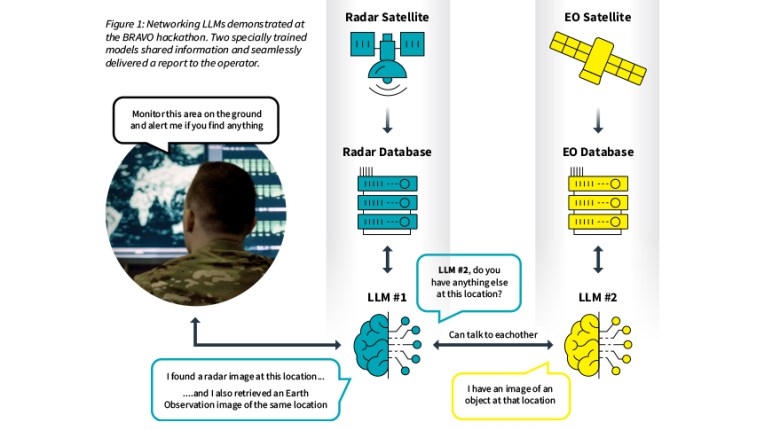

It paid off. At the Air Force’s spring 2023 BRAVO hackathon, Collin Paran, our AI solutions architect for the capability, led a team to win best data visualization and best user interface for linking two LLMs in a classified environment using zero trust protocols.

The LLMs, each a powerful, proven learner (one trained on radar sensor data, the other on Earth observation imagery), shared data with each other. The LLM with the radar expertise was designated as the moderator, and it provided a fast, consolidated answer to the operator through a chat interface.

THE GENERATIVE AI SOLUTION: Solutions Architect Collin Paran Introduces Booz Allen i2S2

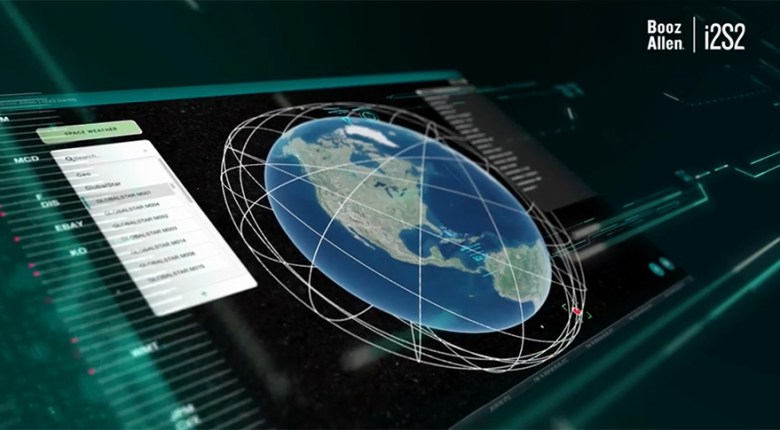

It was thrilling to see the linked-LLM idea succeed in the hackathon’s classified environment. Encouraged by the BRAVO win, we built on the concept to develop a secure mobile solution employing multiple AI models—as far as we know, a first for the classified space. We overlaid the data with astrodynamics and SDA space traffic management analysis to deliver comprehensive insights into space behaviors and threats.

As Ron Craig, our vice president for space solutions and strategy, points out, “Every space leader we talk to needs smarter data, faster.” And we know that defense organizations, intelligence agencies, and commercial space businesses all have different goals, as well as missions that must adapt to new threats and priorities. So flexibility was table stakes.

Accordingly, we developed a flexible open-architecture framework allowing us to employ agents with varying expertise and train them to work together—for example, an orbital expert agent and a missile warning agent.

For the user experience, we designed a conversational interface so operators can rapidly receive information, alerts, and recommended courses of action. On the front end, the operator chats with the concierge agent, which communicates with the others and delivers consolidated feedback. The interface, a chatbot which accommodates multiple languages, can be modified—for example, to communicate via voice commands.
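The concierge pattern described above can be sketched in a few lines. This is a simplified illustration rather than the i2S2 implementation: the specialist functions are hypothetical stubs that echo the question where a real system would call an LLM, and the `Concierge` class and its `ask` method are names invented for the example.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for specialist agents; a real system would query LLMs here.
def orbital_agent(question: str) -> str:
    return f"[orbital] analysis of: {question}"


def missile_warning_agent(question: str) -> str:
    return f"[missile-warning] analysis of: {question}"


class Concierge:
    """Front-end agent: relays the operator's question and consolidates replies."""

    def __init__(self, specialists: Dict[str, Callable[[str], str]]):
        self.specialists = specialists

    def ask(self, question: str) -> str:
        # Fan the question out to every specialist, then merge the answers.
        replies = [agent(question) for agent in self.specialists.values()]
        return "\n".join(replies)


concierge = Concierge({
    "orbital": orbital_agent,
    "missile_warning": missile_warning_agent,
})
answer = concierge.ask("What is object 12345 doing?")
print(answer)
```

Keeping the specialists behind a plain dictionary of callables reflects the open-architecture idea: an agent can be added or swapped without changing the operator-facing interface.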

On the back end, the solution continually ingests the most recent SDA-STM observation and space environment data, overlays it with threat intelligence, and incorporates the most recent owner and operator ephemeris, vehicle state data, and maneuver plans. It also executes high-fidelity propagation models that incorporate near real-time drag predictions, increasing accuracy while reducing false alerts.

The solution applies AI and machine learning (AI/ML) to all that valuable data, automatically generating recommended courses of action and highlighting, for example, the option that presents the least risk or requires the least fuel.
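As a rough sketch of that last step, the snippet below picks a highlighted option from a list of candidate courses of action. It assumes each option already carries a risk score and a fuel cost. The `CourseOfAction` fields, the `recommend` function, and every number shown are illustrative assumptions, not output from the actual solution.

```python
from dataclasses import dataclass


@dataclass
class CourseOfAction:
    name: str
    risk: float     # assumed score from 0 (safest) to 1 (riskiest)
    fuel_kg: float  # assumed propellant cost


def recommend(options, prioritize="risk"):
    """Return the option with the least risk or least fuel; the other breaks ties."""
    keys = {
        "risk": lambda c: (c.risk, c.fuel_kg),
        "fuel": lambda c: (c.fuel_kg, c.risk),
    }
    return min(options, key=keys[prioritize])


# Hypothetical candidates with made-up values.
options = [
    CourseOfAction("Hold and monitor", risk=0.30, fuel_kg=0.0),
    CourseOfAction("Small in-track burn", risk=0.05, fuel_kg=0.4),
    CourseOfAction("Large radial burn", risk=0.02, fuel_kg=2.5),
]

print(recommend(options).name)
print(recommend(options, prioritize="fuel").name)
```

In practice an operator would probably want the trade-off shown for every option, with the highlight serving as a starting point rather than a decision.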

We launched the solution at April’s Space Symposium. Our team loved seeing space operators excited about both its technical capabilities and how easy it is for humans to test-drive. It’s a breakthrough made possible by the complementary abilities of the powerful triad of operations specialists, AI architects, and GenAI.

Booz Allen i2S2: Integrated, Intelligent SDA-STM

Using multiple linked LLMs, Booz Allen i2S2 continually fuses classified and unclassified data to provide defense, intelligence, and commercial space organizations with fast, accurate data tailored to their mission.

It accelerates space decisions by using AI/ML to continually fuse multiorbit, multisensor, multisource data and evaluate threats.

- Helps stakeholders manage space assets, prevent collisions, and determine satellite intent

- Provides timely alerts with a range of courses of action for safety, avoidance, deterrence, and defense

- Incorporates space weather/drag modeling, as well as propagation and conjunction tools for pinpoint orbit determination

- Leverages open-architecture frameworks using cloud-agnostic platforms for scalability, flexibility, and ease of use
