Artificial intelligence is being developed to provide a robotic brain for a future NASA mission to land on the icy surface of one of the solar system’s ocean moons, such as Europa or Enceladus.

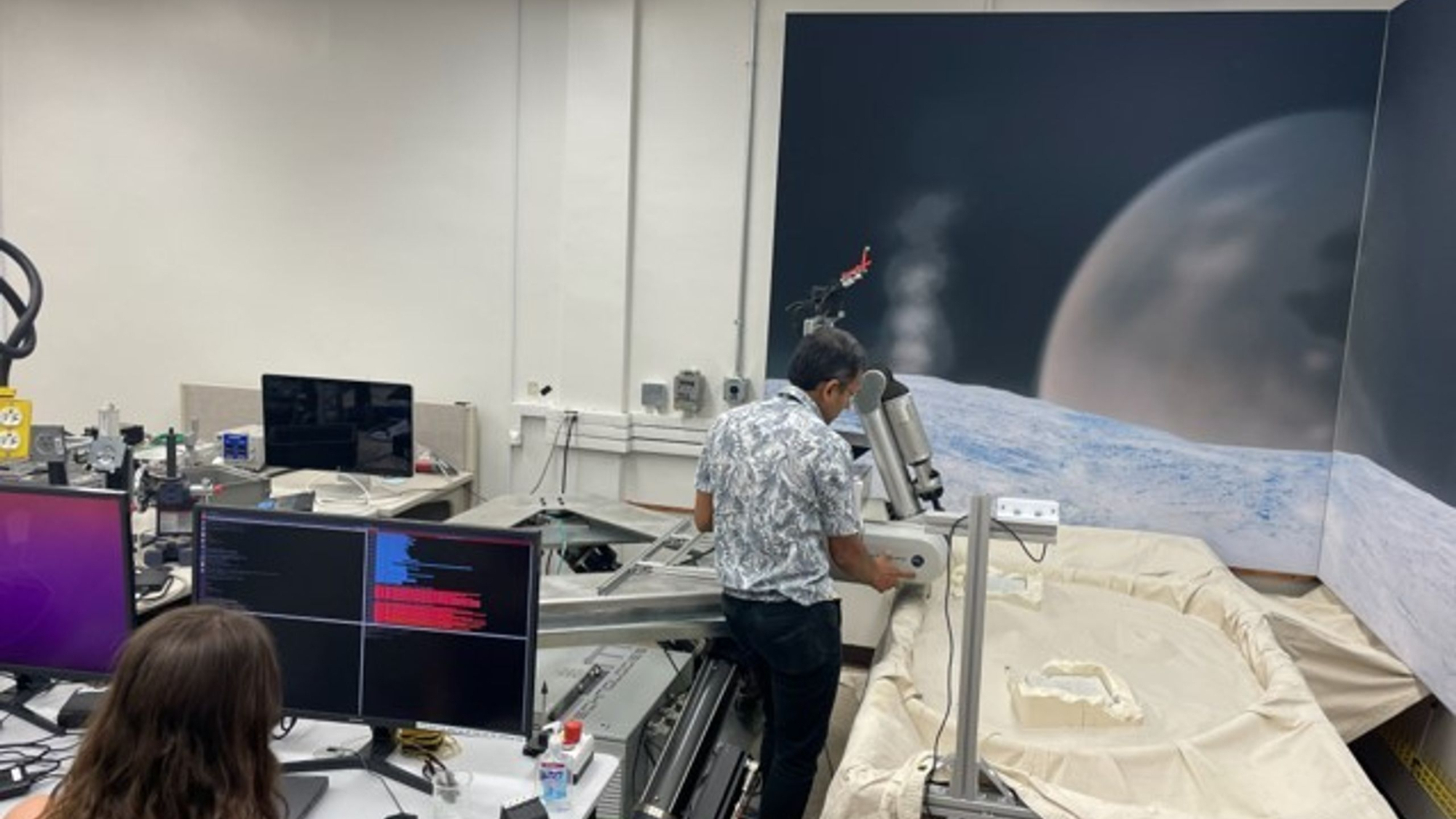

Teams of researchers are developing the autonomous software with two tools: a robotic arm at NASA’s Jet Propulsion Laboratory (JPL) that mimics the kind of arm a lander or rover might carry, and a virtual reality simulation at NASA’s Ames Research Center.

Imagine that you’re a robotic lander designed to study Jupiter’s moon Europa, which hosts a deep water ocean far beneath its icy surface. You’ve braved the radiation belts of the giant planet, your retrorockets have fired and you’ve safely touched down on the ice. But perhaps the terrain is more hazardous than you expected, with large ice boulders or deep ravines. Maybe the properties of the ice here are different: harder, thinner, or broken up by micrometeorite impacts.

You try to take a sample, but your scoop gets snagged on something, or your drill jams in the ice. You’ve got a problem, and depending on where Earth and Jupiter are in their respective orbits, it could take up to 53 minutes before your team back on Earth knows anything about it, and at best another 53 minutes, probably longer, before their commands reach you. By that time, your drill might have broken, or you might have tumbled into a ravine. How much better would your chances of survival be if you could make some decisions on your own?
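
That delay is simple arithmetic: distance divided by the speed of light. The short Python sketch below illustrates it under loudly simplified assumptions (circular, coplanar orbits at mean distances of 1 AU for Earth and 5.2 AU for Jupiter), so it gives rough bounds rather than ephemeris values.

```python
# Rough one-way light-time estimate between Earth and Jupiter.
# ASSUMPTION: circular, coplanar orbits at each planet's mean distance
# from the sun, so results are approximations, not ephemeris values.

AU_KM = 149_597_870.7   # astronomical unit in kilometres (IAU definition)
C_KM_S = 299_792.458    # speed of light in km/s

def light_time_minutes(distance_au: float) -> float:
    """One-way signal travel time in minutes for a distance given in AU."""
    return distance_au * AU_KM / C_KM_S / 60.0

EARTH_AU = 1.0    # approximate mean orbital radius of Earth
JUPITER_AU = 5.2  # approximate mean orbital radius of Jupiter

# Planets on the same side of the sun: shortest path.
closest = light_time_minutes(JUPITER_AU - EARTH_AU)
# Planets on opposite sides of the sun: longest path.
farthest = light_time_minutes(JUPITER_AU + EARTH_AU)

print(f"one way: {closest:.0f} to {farthest:.0f} minutes")
print(f"round trip at the far extreme: {2 * farthest:.0f} minutes")
```

Under these assumptions the one-way delay runs from about 35 minutes to about 52 minutes; the eccentricity of both planets’ orbits widens the real range slightly at each end, which is where the figure of up to 53 minutes comes from.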

That’s what engineers and planetary scientists at NASA are hoping to achieve with two agency-funded programs that aim to develop autonomous software, drawing on machine learning, automated reasoning and generative artificial intelligence, for future lander and rover missions to the ocean moons.

These programs are the Ocean Worlds Lander Autonomy Testbed (OWLAT), a robotic setup at JPL, and the Ocean Worlds Autonomy Testbed for Exploration, Research and Simulation (OceanWATERS), a purely virtual-reality tool at NASA Ames.

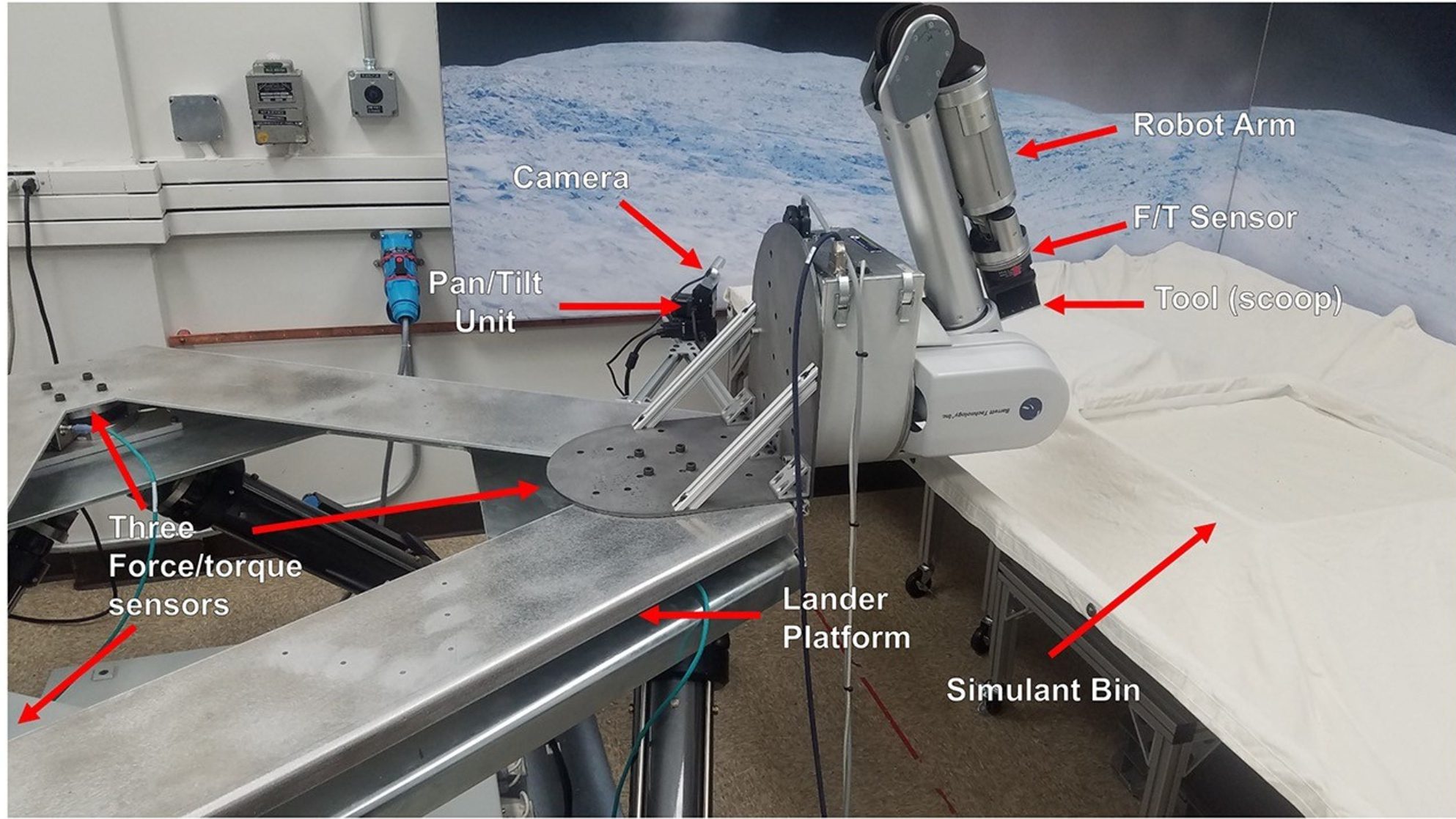

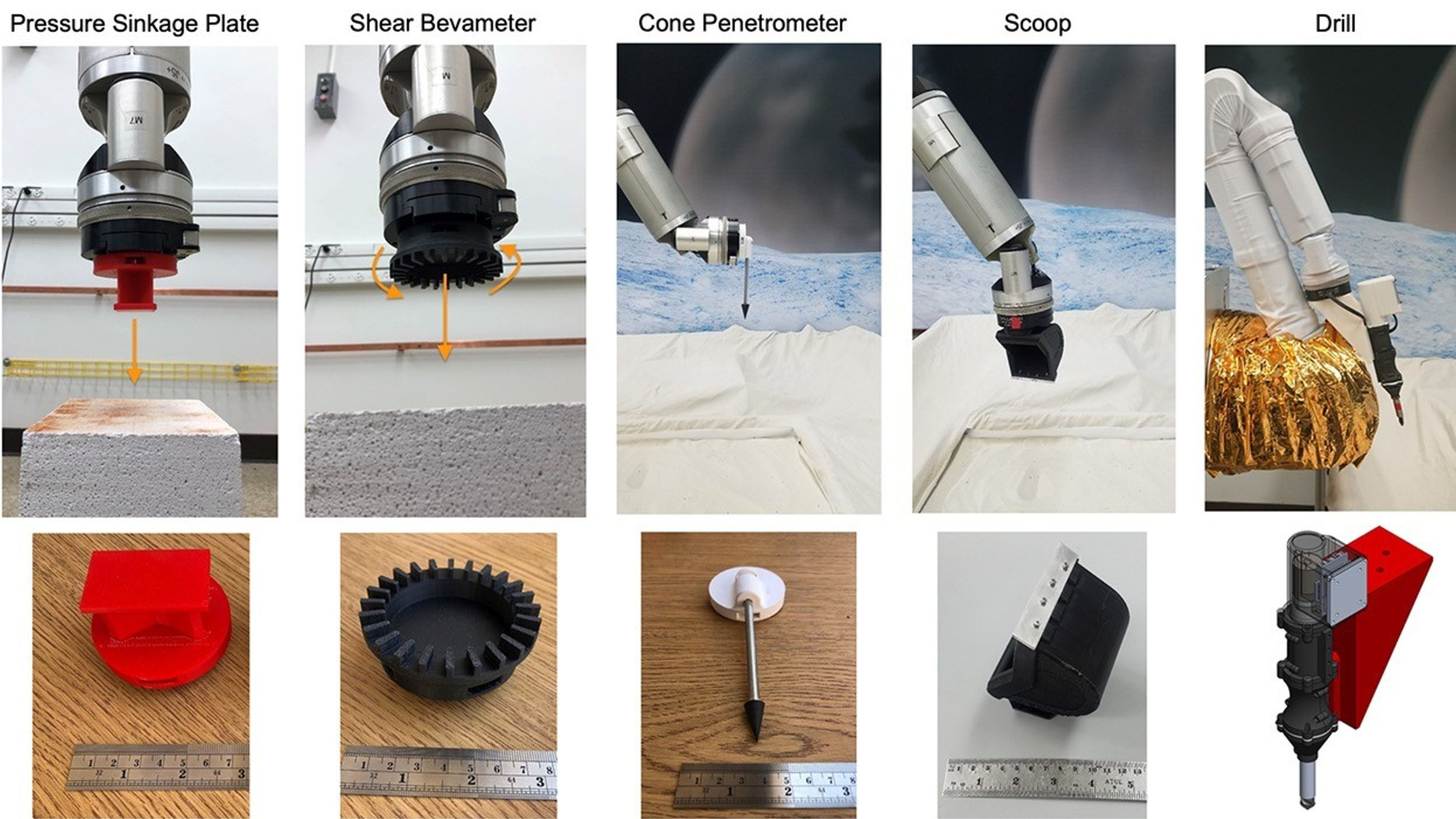

OWLAT sits in the corner of an office at JPL, just a robotic arm on a test bench in front of an artist’s mural of the rugged, frozen surface of an ocean moon. OWLAT is designed to physically replicate how a robotic arm might operate in the low-gravity environment of one of these moons. With the arm, engineers can simulate scooping material off the surface and drilling or penetrating into the ice, as well as measuring the ice’s properties with a shear bevameter (which measures how strongly the surface resists shearing, one indicator of whether it could bear the load of a wheeled vehicle) or a pressure-sinkage plate (which measures how far the ground sinks when pressure is applied).

The arm features a pan-and-tilt camera to inspect what the tools have done, and seven degrees of freedom that allow it to perform complex motions. Built-in force and torque sensors measure the arm’s motion and reaction response and feed that data back to the Robot Operating System. Researchers can simulate faults, technical failures and hazards to see how the arm’s autonomous software reacts to problems without assistance from home.

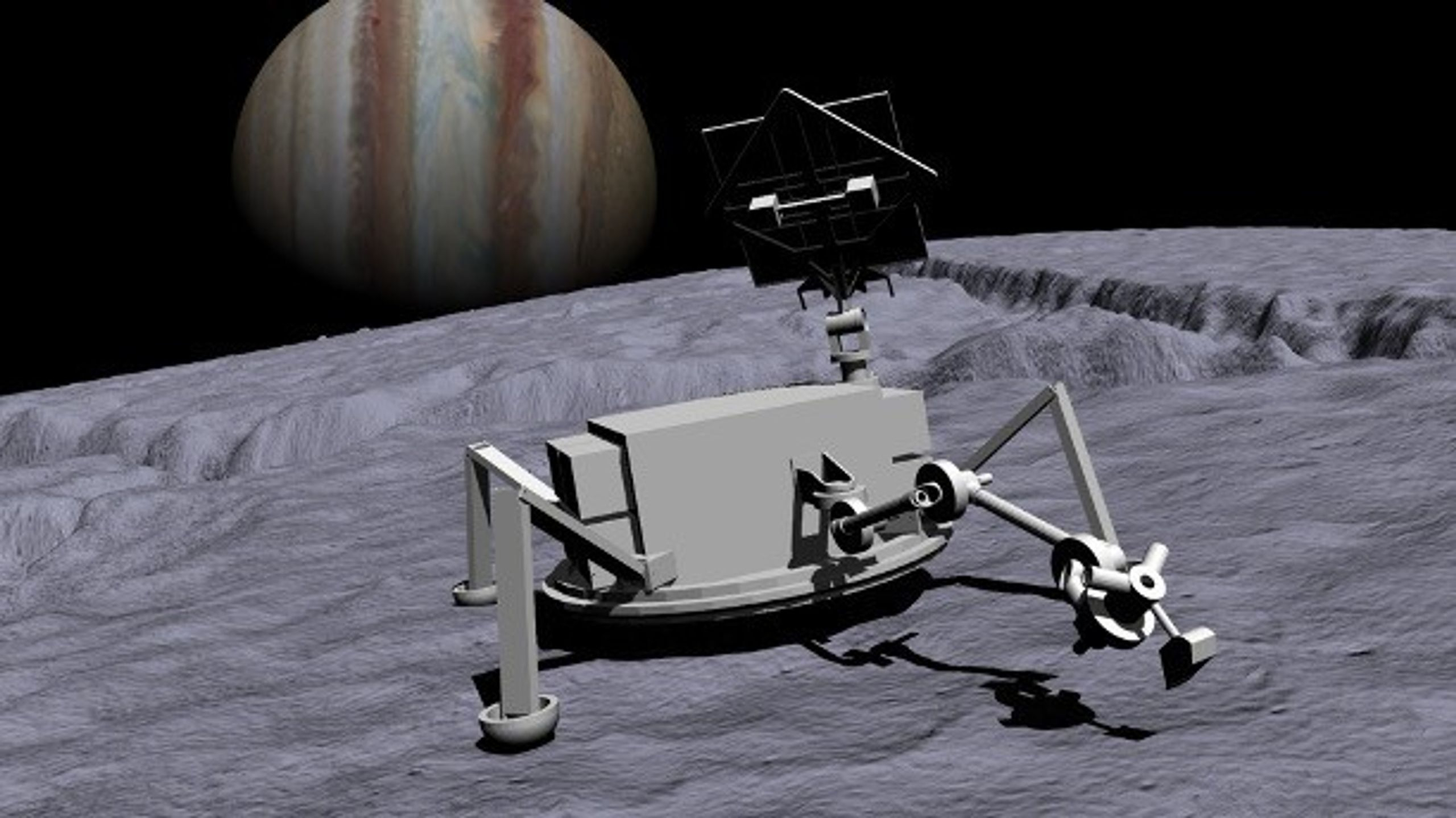

Meanwhile, OceanWATERS does the same thing, but in virtual reality and with a complete lander based on a 2016 design study rather than just a robotic arm. A variety of detailed terrain models can be selected for the simulation: not just variations of icy moons, but also Earth’s Atacama Desert in Chile, which is often used as an analog for harsh, barren environments. Thanks to OceanWATERS’ Generic Software Architecture for Prognostics (GSAP) tool, the simulation can also model battery power: how much power the lander consumes performing certain actions, and how much life the battery has left.
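
To give a feel for the kind of bookkeeping involved, here is a deliberately simple energy-budget sketch in Python. It is not GSAP’s interface or battery model; the action names, power draws, durations and the 20 percent reserve are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# HYPOTHETICAL power draws (watts) and durations (hours), for illustration
# only; these are not values from OceanWATERS or the 2016 design study.
ACTIONS = {
    "image_terrain": (15.0, 0.5),
    "scoop_sample": (60.0, 1.0),
    "drill": (120.0, 2.0),
}

@dataclass
class Battery:
    capacity_wh: float  # total usable energy in watt-hours
    charge_wh: float    # energy currently remaining

    @property
    def state_of_charge(self) -> float:
        """Fraction of capacity still available."""
        return self.charge_wh / self.capacity_wh

    def can_afford(self, action: str, reserve: float = 0.2) -> bool:
        """True if the action leaves at least `reserve` of capacity in hand."""
        power_w, hours = ACTIONS[action]
        remaining = self.charge_wh - power_w * hours
        return remaining >= reserve * self.capacity_wh

    def run(self, action: str) -> None:
        """Deduct the action's energy (power x time) from the battery."""
        power_w, hours = ACTIONS[action]
        energy_wh = power_w * hours
        if energy_wh > self.charge_wh:
            raise ValueError(f"not enough charge for {action}")
        self.charge_wh -= energy_wh

battery = Battery(capacity_wh=1000.0, charge_wh=1000.0)
for action in ["image_terrain", "scoop_sample", "drill"]:
    if battery.can_afford(action):
        battery.run(action)
print(f"state of charge: {battery.state_of_charge:.0%}")
```

A real prognostics tool would go further, estimating how the battery degrades over time and how much useful life remains, but the core question is the same: can the lander afford the next action and still keep a safety margin?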

Both OWLAT and OceanWATERS are built on the same Robot Operating System (ROS), an open-source software framework through which a robot’s software receives telemetry from its sensors and issues commands in response. Through ROS, various mission goals can be simulated, and AI-based fault-correction software can address problems as they arise.
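
In ROS, sensor readings and commands travel as messages between separate software components. The Python sketch below leaves out that plumbing entirely and shows only the decision step such a component might contain: one drill telemetry sample in, one command out. The command names and both thresholds are hypothetical.

```python
from enum import Enum

class Command(Enum):
    CONTINUE = "continue"  # keep drilling
    RETRACT = "retract"    # back the drill out
    STOP = "stop"          # halt immediately and wait

# HYPOTHETICAL limits; real thresholds would come from the arm and tool specs.
TORQUE_LIMIT_NM = 40.0
STALL_DEPTH_RATE_MM_S = 0.05

def respond(torque_nm: float, depth_rate_mm_s: float) -> Command:
    """Map one telemetry sample from the drill to a command."""
    if torque_nm > TORQUE_LIMIT_NM:
        # Over the torque limit: stop before something breaks.
        return Command.STOP
    if depth_rate_mm_s < STALL_DEPTH_RATE_MM_S and torque_nm > 0.5 * TORQUE_LIMIT_NM:
        # High effort but almost no progress: likely jammed, so back out.
        return Command.RETRACT
    return Command.CONTINUE

# Simulated (torque, penetration rate) samples as a drill starts to jam.
telemetry = [(10.0, 1.2), (18.0, 0.9), (25.0, 0.01), (45.0, 0.0)]
for torque, rate in telemetry:
    print(torque, rate, respond(torque, rate).value)
```

Hand-written rules like these are only a baseline; the research described here aims for software that can handle faults its designers did not anticipate.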

Fault recognition and prevention were a key focus of recent research by six teams, all of which used OceanWATERS and three of which also used OWLAT, with the aim of advancing software that could one day fly on a real lander on an icy ocean moon. For example, the teams improved decision-making software by training it with reinforcement learning techniques, developed automated planning that a lander could use to maximize its science output if communications with Earth were interrupted, and wrote software that can adapt autonomously if the terrain it lands on or digs into is not what was expected.
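
As a toy illustration of planning under a fixed budget, the Python sketch below uses a simple greedy heuristic: rank candidate activities by science value per watt-hour and take them in order while energy remains. This is not the method the teams used; the activities, scores and energy costs are all hypothetical.

```python
from typing import NamedTuple

class Activity(NamedTuple):
    name: str
    science_value: float  # HYPOTHETICAL priority score
    energy_wh: float      # HYPOTHETICAL energy cost in watt-hours

def plan(activities: list[Activity], budget_wh: float) -> list[str]:
    """Greedy plan: pick activities with the best value per watt-hour
    until nothing else fits in the remaining energy budget."""
    chosen = []
    remaining = budget_wh
    ranked = sorted(activities, key=lambda a: a.science_value / a.energy_wh, reverse=True)
    for act in ranked:
        if act.energy_wh <= remaining:
            chosen.append(act.name)
            remaining -= act.energy_wh
    return chosen

candidates = [
    Activity("panorama", 2.0, 10.0),
    Activity("scoop_and_analyze", 8.0, 80.0),
    Activity("drill_core", 10.0, 250.0),
    Activity("seismometer_hour", 3.0, 20.0),
]
print(plan(candidates, budget_wh=150.0))
```

A greedy ratio rule is easy to reason about but is not guaranteed to find the best combination, and it ignores ordering, time windows and dependencies between activities, all of which a real onboard planner would have to handle.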

There are a number of large, icy moons in the outer solar system, and many are thought to contain oceans, so there’s no shortage of targets for a future lander to visit.

Europa and Saturn’s moon Enceladus are currently the leading targets, chiefly because of their potential to host life in their oceans. At Saturn, which orbits about 1.4 billion kilometers (870 million miles) from the sun, the communication lag is even worse than at Jupiter: radio signals need more than an hour to travel each way between Earth and Saturn. Nevertheless, the European Space Agency (ESA) successfully landed its Huygens probe on Saturn’s moon Titan in January 2005, so touchdowns on these distant, frigid moons are possible.

NASA’s Europa Clipper spacecraft is now on its way to investigate the eponymous Jovian moon, while ESA’s Jupiter Icy Moons Explorer (JUICE) is also en route to perform two flybys of Europa as well as conduct in-depth studies of Europa’s fellow Galilean moons Ganymede and Callisto, eventually entering into orbit around Ganymede.

While neither Europa Clipper nor JUICE will land, their high-resolution exploration of these icy moons will give mission planners a much better idea of what to expect when they eventually launch a lander, and of where the best sites to search for evidence of life in these alien oceans might be. When those landers finally fly, they’ll be the smartest spacecraft ever launched, and their robotic IQ will be traceable all the way back to a robot arm in a lab and a virtual reality simulation.

Read our previous article: ‘Dune: Prophecy:’ ‘Twice Born:’ Plans go awry, people die, and what’s behind those blue eyes?